As we move from Discovery to Alpha, we are starting to test our technology options. This blog post explains why we have chosen OpenStack, Ansible, Docker containers and Kubernetes.

Over the last 15 years the technology community has seen systems evolve from clusters on bare metal to highly distributed systems on virtualised platforms, spread across dozens of globally dispersed data centres. To manage the complexity this introduces – notably the trade-offs described by the CAP theorem – new technology was introduced to solve the problems the new paradigms created.

Sadly, the technical answer to some of these problems added further complexity. While a given answer may have solved the problem at the time, and maybe created its own industry, the choice was not collectively vetted with critical thinking and pragmatism. Compounding this problem is the fact that few organisations employed engineers with this skillset. After all, they are expensive. Furthermore, few organisations owned both infrastructure on the scale of tens of thousands of physical nodes and multiple distributed systems with aggressive release schedules.

Virtualisation

One of the technology shifts we have seen over this time is virtualisation. There are many reasons platform virtualisation succeeded. Alongside it, the idea of operating-system virtualisation, known as containers, emerged. Containers are far more resource efficient and have lower latency by orders of magnitude. However, platform virtualisation had already gained mind share, and the formidable early container implementation – Solaris Zones – never really caught on.

Linux containers, with the associated kernel namespaces, were mainly introduced after the industry’s leading Linux platform, RHEL 5, was released. This allowed the trend of platform virtualisation to continue, and configuration management systems designed to maintain long-lived virtualised platform instances remained strong. However, long-lived instances did not agree with the idea of cloud computing made famous by Amazon Web Services. A gap in efficiency arose.

Circa 2014 the immutable pattern – infrastructure is data and everything else is replaced on each deployment – was a trending goal for infrastructure. Docker, now a well-known name in the container industry, was gaining steam simply by filling the void. Kubernetes, a container orchestration engine, was released by Google. Kubernetes’ interface is declarative and written in YAML, a data serialisation language. Tying this all together was straightforward with Ansible, a pragmatic, idempotent and agentless configuration management system. By no coincidence, Ansible’s interface is also YAML.
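To make "idempotent" concrete, here is a minimal Python sketch of the idea rather than anything Ansible actually runs. The function name `ensure_packages` and the package names and versions are hypothetical, and a plain dictionary stands in for a real host. The point is only that you describe the desired state, and applying it a second time changes nothing.

```python
from typing import Dict


def ensure_packages(installed: Dict[str, str], desired: Dict[str, str]) -> bool:
    """Bring `installed` in line with `desired`; return True if anything changed."""
    changed = False
    for name, version in desired.items():
        if installed.get(name) != version:
            # Stand-in for a real install or upgrade step (hypothetical).
            installed[name] = version
            changed = True
    return changed


# Hypothetical host state and desired state, for illustration only.
host = {"nginx": "1.6"}
desired = {"nginx": "1.8", "git": "2.1"}

first_run = ensure_packages(host, desired)   # applies two changes
second_run = ensure_packages(host, desired)  # nothing left to do
```

Because the second run reports no change, the same description can be applied on every deployment without checking first whether it has already been applied.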

These technologies complement each other handsomely and provide a rich solution to today’s trends, as summarised by the twelve-factor methodology. Just as processes live and die on a single platform, containers and pods live and die on distributed platforms. Ansible manages the interface to cloud providers, both public and private. Hence, you push the full stack build and Kubernetes manages the entire lifecycle of the pods of containers. Rinse and repeat. The microservices architecture was born. However, its simplicity makes it easy to fall for the fallacies of distributed computing.
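The "live and die" lifecycle can be pictured as a reconciliation pass: compare what is running with what was declared, replace anything that has died, and trim any surplus. The Python below is a simplified sketch under our own assumptions, not Kubernetes code. The function `reconcile`, the `web-N` naming scheme and the boolean health flag are all hypothetical.

```python
from itertools import count
from typing import Dict


def reconcile(pods: Dict[str, bool], replicas: int) -> Dict[str, bool]:
    """Return the pod set after one pass; `pods` maps pod name -> is it alive."""
    # Dead pods are replaced, never repaired.
    alive = {name: True for name, ok in pods.items() if ok}
    # Scale down: keep only the first `replicas` healthy pods.
    alive = dict(list(alive.items())[:replicas])
    # Scale up: start new pods under names that have not been used before.
    ids = count()
    while len(alive) < replicas:
        name = f"web-{next(ids)}"
        if name not in pods:
            alive[name] = True
    return alive


# One pod has died; we declared that three should be running.
state = reconcile({"web-0": True, "web-1": False}, replicas=3)
```

Running the pass again on its own output returns the same set, which is what makes "rinse and repeat" safe.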

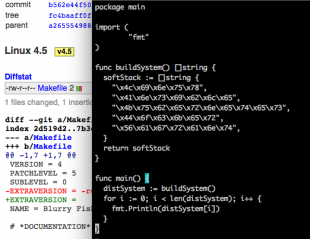

Version control, while a well-known problem with several solutions, became even more distributed with the introduction of Git. Further, service providers like GitHub and Bitbucket offer free storage and paid services. Orchestration of virtualisation platforms was introduced by HashiCorp’s Vagrant. Today, a small team can develop on virtualised instances on their own workstations, managed by code pushed to a central Git repo.

Updating best practice

BIS is currently undergoing organisational change to establish best practice for developing digital services. As such, we are adopting the current stable, and revolutionary, trends in distributed systems architecture in order to provide the most efficient, maintainable and cost-effective implementations possible.

The developer installs VirtualBox, Git and Vagrant. The developer then checks out a Git repository and issues a `vagrant up`. The entire development stack, which mirrors production, is built in a matter of minutes. The developer writes code and checks the code into Git. Post-commit hooks from Git inform Jenkins of the change. Jenkins checks out the changes and performs functional testing on the entire stack. If all succeeds, the artefact is pushed to the registry for further testing or deployment. If anything fails, Jenkins informs the developers.
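The flow above can be sketched as an ordered list of stages that stops at the first failure and tells the developers which stage broke. This is an illustrative Python model only, not our Jenkins configuration. The stage names, the `run_pipeline` function and the `notify` callback are hypothetical, and each stage is a stub that simply returns success or failure.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a step that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], notify: Callable[[str], None]) -> bool:
    """Run stages in order; stop and notify on the first failure."""
    for name, step in stages:
        if not step():
            notify(f"stage failed: {name}")
            return False
    return True


messages: List[str] = []  # stands in for mail or chat notifications

# Stubbed stages; here the functional tests are made to fail.
stages: List[Stage] = [
    ("checkout", lambda: True),
    ("build stack", lambda: True),
    ("functional tests", lambda: False),
    ("push artefact", lambda: True),
]

ok = run_pipeline(stages, messages.append)
```

In this run the artefact is never pushed, because the pipeline stops as soon as the functional tests fail.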

Operations personnel manage the production and preproduction environments with the same Ansible playbooks, and through the same remote APIs, that Jenkins uses to automate the full stack build for functional testing. Ansible is open-source software, which lets us abstract the provider’s API endpoints and gives us the flexibility to work with providers it does not support out of the box.

The provider selected offers an OpenStack environment. OpenStack offers its own API; however, an optional AWS-compatible drop-in replacement is also available. This is convenient in hybrid environments where it may be beneficial to use AWS for “out of band” development. OpenStack itself is open-source software, with several supported professional releases available for hosting providers as well as for in-house implementation. Regardless, Ansible has native support and plugins available for several remote API implementations.

You are more than welcome to follow our progress at https://github.com/BISDigital/infrastructure. All constructive criticism and suggestions are welcome.
